Last week I saw claims all over the Internet about DeepSeek R1, an open-source model said to be just as strong as, if not stronger than, paid options like OpenAI o1:

Source: https://arxiv.org/pdf/2501.12948

Since I use these AI models extensively in my day-to-day job (through Cursor AI), I decided to find out which one works best for me.

200 Cursor Requests later, I’m sharing the results of my experiments.

This issue is a written form of the video I made recently. If you prefer to watch instead of reading, feel free to check it out here:

The Experiment Setup

I picked the top two models from the lmarena leaderboard, i.e. Gemini-Exp-1206 and OpenAI’s o1:

I also included the contender, DeepSeek R1.

And I designed three practical coding challenges to test each model's capabilities:

Mood Tracker Web App

Calendar-based mood logging

Visual mood tracking

Data visualization with charts

Mood Tracker designed by o1

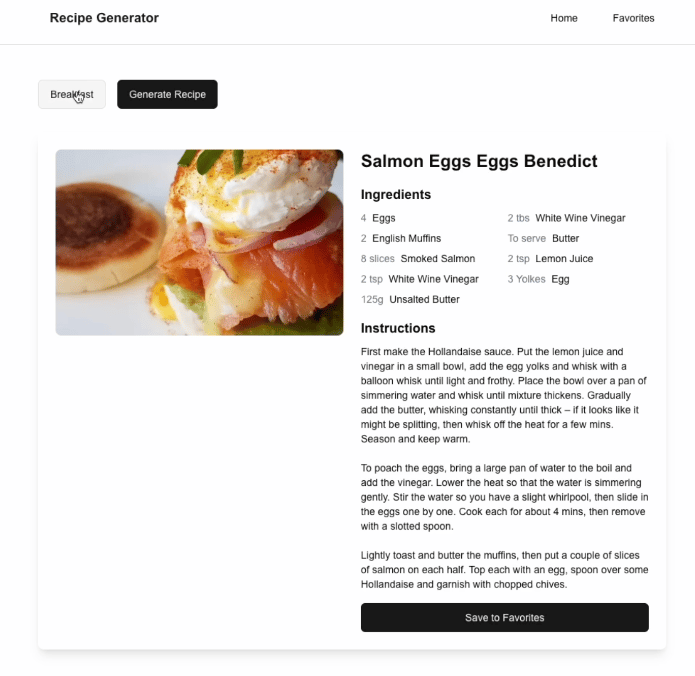

Random Recipe Generator

Integration with MealDB API

Category Filtering

Favorites management

Recipe Generator designed by DeepSeek R1

"Catch the Mole" Game

Real-time animations

Sound effects

Progressive difficulty

Catch The Mole Game designed by Gemini

How I Tested Each Model

I followed the exact same process for all three challenges:

Write a Mini Requirements Doc

I typed up a concise list of what each project should include, such as “use a calendar library,” “store data with local storage,” or “fetch data from an external API.”

Ask the AI for the Complete Code

I gave the model my requirements and waited for the code. If it had bugs or missing features, I provided specific feedback (“the color-coding for the mood is broken,” etc.) until it worked.

Score the Results

Once the app ran, I tested it against my checklist. Did the main features work? Was the code neatly organized? Did it look good to me as a user? I gave each model a final rating.

Compare Them

After finishing all three apps with all three models, I gathered my notes, tallied the scores, and decided which one was the overall winner.

This is the flow of Code Generation

This is what an example PRD looked like:

---

name: "Mood Tracker"

about: "Modern mood tracking web app with data visualization"

date_created: "2025-01-26"

project_name: "MoodTracker"

tech_stack: ["NextJS 15", "TypeScript", "Shadcn", "Tailwind CSS", "Chart.js", "date-fns"]

version: "1.3"

---

# 🎯 Mood Tracker PRD

A modern web application for logging daily moods and visualizing emotional trends with charts.

---

## 1. **Success Criteria**

1. **Core Functionality**

- [ ] **Clickable Calendar**: Users can select a date to log or edit a mood entry.

- [ ] **Emoji & Note Input**: A modal or dialog with an emoji picker and text field.

- [ ] **Local Data Storage**: Persist mood entries between sessions.

- [ ] **Data Visualization**: At least two Chart.js charts to display weekly, monthly, or overall trends.

- [ ] **Mobile-Responsive**: Layout should adjust for smaller screens without major issues.

2. **Validation Checklist**

- [ ] **Build & Run**: Fresh `npm install && npm run dev` works without errors.

- [ ] **Calendar Interaction**: Clicking a calendar date opens the mood logging UI.

- [ ] **Color Coding**: Each date cell or icon changes based on mood score or emoji.

- [ ] **Chart Page**: A separate page or section to visualize stats (e.g., line chart + pie chart).

- [ ] **Data Persistence**: Entries remain available if the user navigates away and comes back later.

---

## 2. **Tech Stack**

- **NextJS 15** (App Router) for site structure

- **TypeScript** for type safety

- **Shadcn** UI components (dialogs, buttons, forms)

- **Tailwind CSS** for styling

- **Chart.js** for data visualization

- **date-fns** for date operations

- **localforage** (or equivalent) for local data storage

- **@emoji-mart/react** for an emoji picker

### **Why These Choices?**

- **NextJS + TypeScript**: Great for server/client flexibility and type safety

- **Shadcn + Tailwind**: Rapid UI development with consistent design

- **Chart.js**: Straightforward library for rendering charts

- **date-fns**: Lightweight date utilities

---

## 3. **Design & Mood Scores**

| MoodScore | Mood     | Tailwind Color | Emoji |
|-----------|----------|----------------|-------|
| 1         | Angry    | `red-500`      | 😡    |
| 2         | Sad      | `orange-400`   | 😞    |
| 3         | Neutral  | `yellow-300`   | 😐    |
| 4         | Happy    | `lime-400`     | 😊    |
| 5         | Ecstatic | `emerald-500`  | 😄    |

> You can style each date cell background or display an icon to indicate the logged mood.

---

## 4. **User Stories**

1. **Daily Mood Logging**

- **As a user**, I want to quickly log how I feel each day so I can track my emotional journey.

- **Given** I click on a specific date

- **When** I choose an emoji and type a note

- **Then** the date on the calendar updates visually to reflect my mood

2. **Mood Analysis**

- **As a user**, I want to see a higher-level overview of my moods so I can spot trends.

- **Given** I navigate to a “Stats” page

- **When** I select a timeframe (weekly, monthly, etc.)

- **Then** I see at least two types of charts illustrating changes or distributions in my mood data

---

## 5. **Data Structures**

```typescript
export interface MoodEntry {
  date: string; // e.g. "2025-01-23"
  emoji: string; // e.g. "😊"
  note: string;
  moodScore: 1 | 2 | 3 | 4 | 5;
}
```

- Store mood entries in `lib/storage.ts` using local data storage (e.g., localforage).

- Components like `MoodCalendar` and `MoodChart` can import these entries to display logs.

### 6. File Structure

```
mood-tracker/
├── app/
│   ├── (dashboard)/
│   │   └── page.tsx      # main calendar view
│   ├── stats/
│   │   └── page.tsx      # charts & statistics
│   └── layout.tsx        # global layout or shared UI
├── components/
│   ├── MoodCalendar.tsx
│   ├── MoodChart.tsx
│   └── EmojiPicker.tsx
├── lib/
│   ├── storage.ts
│   └── mood.ts           # data types
└── styles/
    └── globals.css
```

### 7. Additional Notes

- **Shadcn**: Ideal for modals (Dialog component), buttons, forms, etc.

- **Chart.js**: Use a line chart, bar chart, pie chart, or any combination to showcase data trends.

- **Optional**: You can add a hover tooltip on each calendar day to preview the note or emoji.
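To make the PRD's data model more concrete, here is a minimal sketch of what `lib/storage.ts` and the mood table could look like in code. It is an illustration only, not output from any of the three models. Everything except `MoodEntry` is a hypothetical name (`MOOD_STYLES`, `KeyValueStore`, `MemoryStore`, `saveEntry`, `loadEntries`, `cellClass`, and the `"mood-entries"` key). It also assumes a synchronous, `localStorage`-style store to stay self-contained; localforage's API is Promise-based, so a real version built on it would need `await`.

```typescript
export interface MoodEntry {
  date: string; // e.g. "2025-01-23"
  emoji: string; // e.g. "😊"
  note: string;
  moodScore: 1 | 2 | 3 | 4 | 5;
}

// Mood table from section 3 of the PRD, keyed by score.
// Full class names are spelled out because Tailwind only picks up
// complete class strings that appear in the source.
export const MOOD_STYLES: Record<
  MoodEntry["moodScore"],
  { mood: string; bgClass: string; emoji: string }
> = {
  1: { mood: "Angry", bgClass: "bg-red-500", emoji: "😡" },
  2: { mood: "Sad", bgClass: "bg-orange-400", emoji: "😞" },
  3: { mood: "Neutral", bgClass: "bg-yellow-300", emoji: "😐" },
  4: { mood: "Happy", bgClass: "bg-lime-400", emoji: "😊" },
  5: { mood: "Ecstatic", bgClass: "bg-emerald-500", emoji: "😄" },
};

// Assumption: a small subset of the Web Storage API, so the same code can
// run against window.localStorage or an in-memory stand-in.
export interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// In-memory stand-in for tests and server-side rendering.
export class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();
  getItem(key: string): string | null {
    return this.data.get(key) ?? null;
  }
  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }
}

const STORAGE_KEY = "mood-entries"; // hypothetical key name

// Read all saved entries; an empty store yields an empty list.
export function loadEntries(store: KeyValueStore): MoodEntry[] {
  const raw = store.getItem(STORAGE_KEY);
  return raw ? (JSON.parse(raw) as MoodEntry[]) : [];
}

// Insert or overwrite the entry for a date (one entry per day),
// keeping the list sorted by date.
export function saveEntry(store: KeyValueStore, entry: MoodEntry): MoodEntry[] {
  const others = loadEntries(store).filter((e) => e.date !== entry.date);
  const next = [...others, entry].sort((a, b) => a.date.localeCompare(b.date));
  store.setItem(STORAGE_KEY, JSON.stringify(next));
  return next;
}

// Background class for a calendar cell; days without an entry stay uncolored.
export function cellClass(entry: MoodEntry | undefined): string {
  return entry ? MOOD_STYLES[entry.moodScore].bgClass : "bg-transparent";
}
```

In the browser, `window.localStorage` already satisfies `KeyValueStore`, so `saveEntry(window.localStorage, entry)` would persist entries between sessions, while `MemoryStore` keeps the logic testable without a browser.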

Results and Key Findings

Here, I will only go through the final results of the experiment. If you’re interested in the checkpoints and specific implementations, watch the full video, where I go through each code generation in detail.

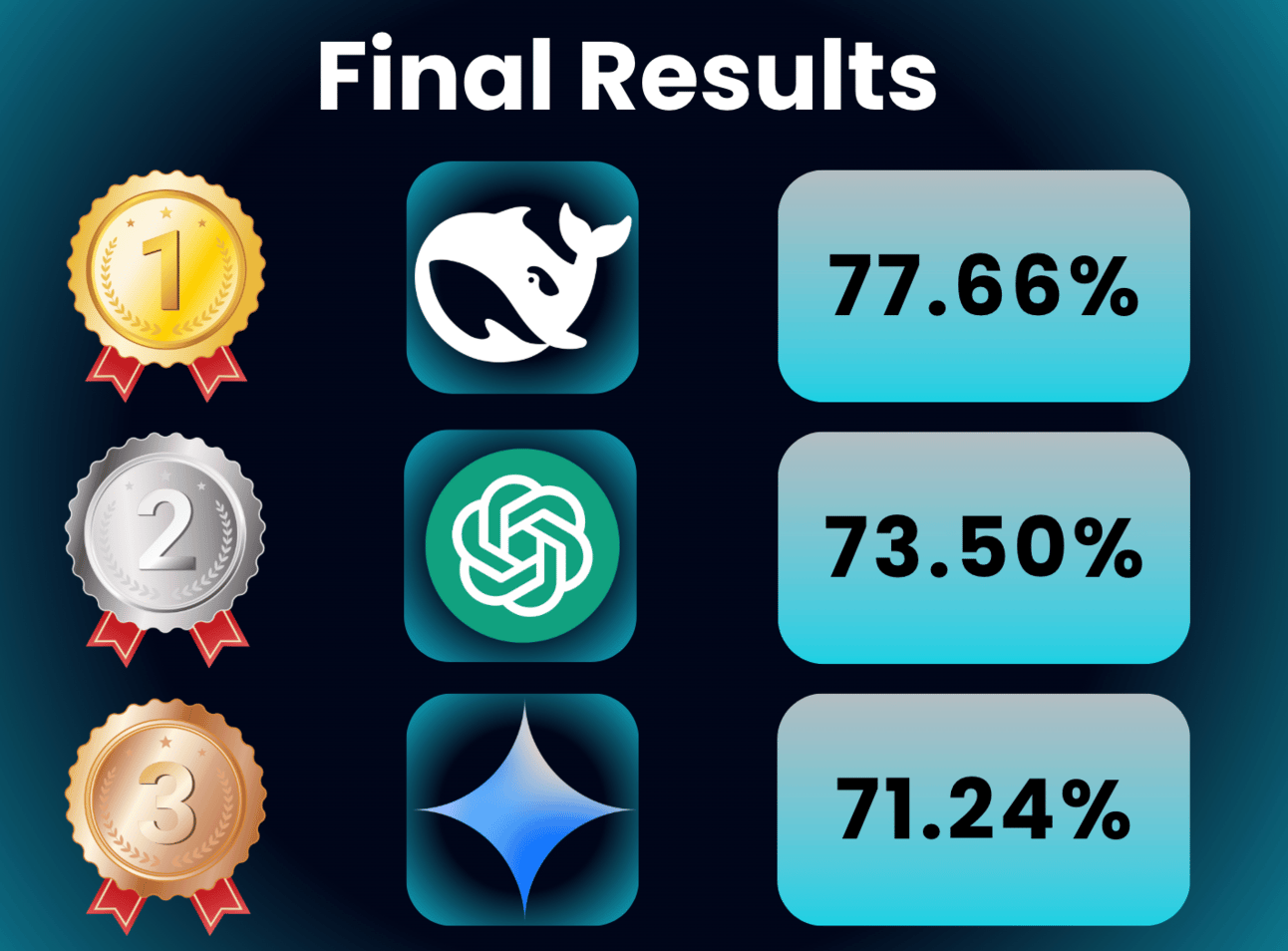

I calculated a Performance Score for each model as the percentage of all available points it earned. The results were as follows:

DeepSeek R1: 77.66%

OpenAI o1: 73.50%

Gemini 2.0: 71.24%

DeepSeek R1 comes out on top!

The margin was narrow, though: about 6.4 percentage points separate first place from last, and every model performed decently.
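The Performance Score is plain arithmetic: points earned divided by points available, times 100. Here is a minimal sketch, assuming a rubric of per-criterion points. The `RubricItem` shape, the criterion names, and the numbers are illustrative only; they are not the actual scoring sheet or scores from the experiment.

```typescript
// Hypothetical rubric row: points earned out of points available.
interface RubricItem {
  name: string;
  earned: number;
  max: number;
}

// Performance score = earned points as a percentage of all available points,
// rounded to two decimal places.
function performanceScore(items: RubricItem[]): number {
  const earned = items.reduce((sum, item) => sum + item.earned, 0);
  const max = items.reduce((sum, item) => sum + item.max, 0);
  if (max === 0) {
    throw new Error("rubric has no available points");
  }
  return Math.round((earned / max) * 10000) / 100;
}

// Illustrative numbers only, not results from the experiment.
const exampleRubric: RubricItem[] = [
  { name: "Core features", earned: 8, max: 10 },
  { name: "Code organization", earned: 7, max: 10 },
  { name: "Look and feel", earned: 9, max: 10 },
];

console.log(performanceScore(exampleRubric)); // 24 of 30 points -> 80
```

Summing points across all three challenges before dividing, rather than averaging per-challenge percentages, lets challenges with more available points count for more. Which of the two applies here is not stated in the post.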

That said, I don’t see any one model as a silver bullet. Each has its pros and cons, which is what we’ll dive into now.

Pros and Cons of Each Model

DeepSeek R1

Pros

Thinking tokens

High quality

Creative

Stable

Free

Cons

Slow (long inference time)

Often times out in Cursor AI

Sometimes overthinks when debugging

OpenAI o1

Pros

Good integration with Cursor AI

High quality

Creative

Stable

Cons

Expensive

No thinking tokens

Not as good at debugging as Claude Sonnet

Gemini 2.0

Pros

Follows instructions well

Very fast!

Free

Cons

Less creative

No thinking tokens (in Cursor)

Needs very specific instructions

Bonus Mention: Claude Sonnet 3.5

Pros

Great at debugging

Fast

Safe bet (stable and consistent)

Cons

Less creative than “reasoning” models

Conclusion

DeepSeek R1 came out with the highest score for these coding challenges at 77.66%, but OpenAI o1 and Gemini 2.0 also performed well. In practice, model selection often depends on your specific use case:

If you need speed, Gemini is lightning-fast.

If you need creative or more “human-like” responses, both DeepSeek and o1 do well.

If debugging is the top priority, Claude Sonnet is an excellent choice even though it wasn’t part of the main experiment.

No single model is a total silver bullet. It’s all about finding the right tool for the right job, considering factors like budget, tooling (Cursor AI integration), and performance needs.

Feel free to reach out with any questions or experiences you’ve had with these models. I’d love to hear your thoughts!