This Thursday, I did something that made me uncomfortable: I bought Claude Max despite all the GPT-5 hype flooding my timeline - and despite GPT-5 being 12x cheaper.

The reason? After a deep dive into testing the Claude Code router with different models (Horizon Beta, Qwen3-Coder, GPT-OSS, and yes, GPT-5), I discovered something most developers are missing. Claude Code has a "hidden moat" that GPT-5 can't cross, even with all its PhD-level reasoning and aggressive pricing.

Here's what I discovered during my testing marathon...

The Testing Marathon That Revealed Everything

This week, I went down a rabbit hole testing how different models perform with Claude Code's interface. Using various routing solutions and OpenRouter integration, I tried everything:

GPT-5 through Claude Code router

GPT-OSS (20B and 120B variants)

DeepSeek Reasoner

The pattern was consistent: non-Anthropic models produced terse, unhelpful responses. "Fixed the bug." "Added error handling." "Optimized the function."

Meanwhile, Claude delivered comprehensive, production-ready code with detailed explanations, exactly what developers need.

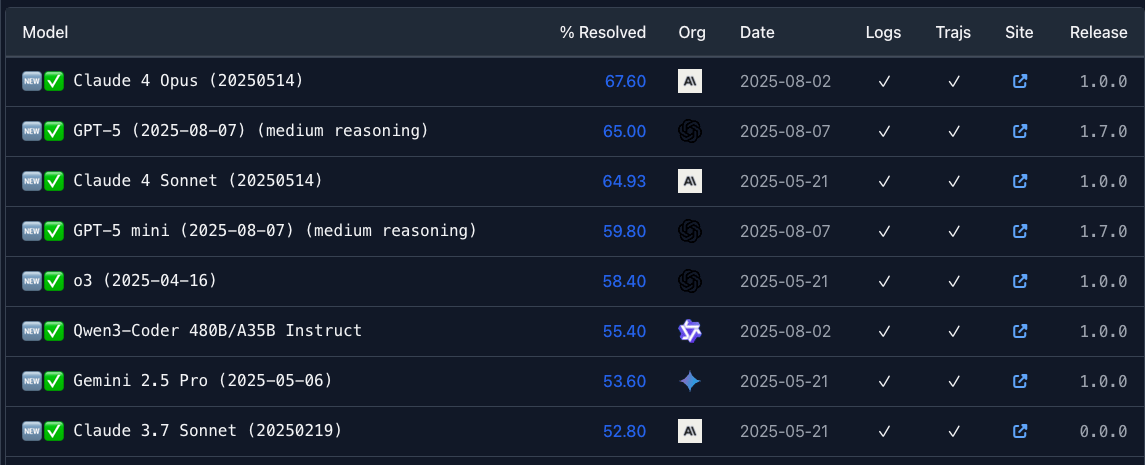

The numbers tell part of the story. Claude Opus 4.1 performs strongly on SWE-bench, the benchmark for real-world coding tasks, and consistently outperforms competitors in practical coding scenarios. That performance gap represents hours of debugging, complete rewrites, and the difference between shipping and struggling.

GPT-5 does not beat Claude 4 Opus on SWE-bench (and this table doesn't include Opus 4.1, which scored even higher).

But here's where it gets complicated...

The Router Lottery: When Intelligence Becomes Unpredictable

Before diving into Claude's moat, let's acknowledge GPT-5's genuine strengths. As Simon Willison notes, the pricing is "aggressively competitive". At $1.25 per million input tokens, it's 12x cheaper than Claude Opus 4.1's $15/million. For many use cases, that's game-changing.
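The 12x figure follows directly from the two input prices quoted above. Here is a minimal sketch of that arithmetic. It assumes input tokens only (output pricing isn't covered here), and `input_cost` is a hypothetical helper name, not part of any SDK.

```python
# Input prices in USD per million tokens, as quoted in the text.
GPT5_INPUT_PER_M = 1.25
OPUS_41_INPUT_PER_M = 15.00


def input_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD for a number of input tokens (hypothetical helper)."""
    return tokens / 1_000_000 * price_per_million


# How many times more expensive Opus 4.1 input is than GPT-5 input.
ratio = OPUS_41_INPUT_PER_M / GPT5_INPUT_PER_M
print(f"Opus 4.1 input costs {ratio:.0f}x the GPT-5 input price")
```

For a rough budget, call `input_cost` with your own monthly token estimate and each price.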

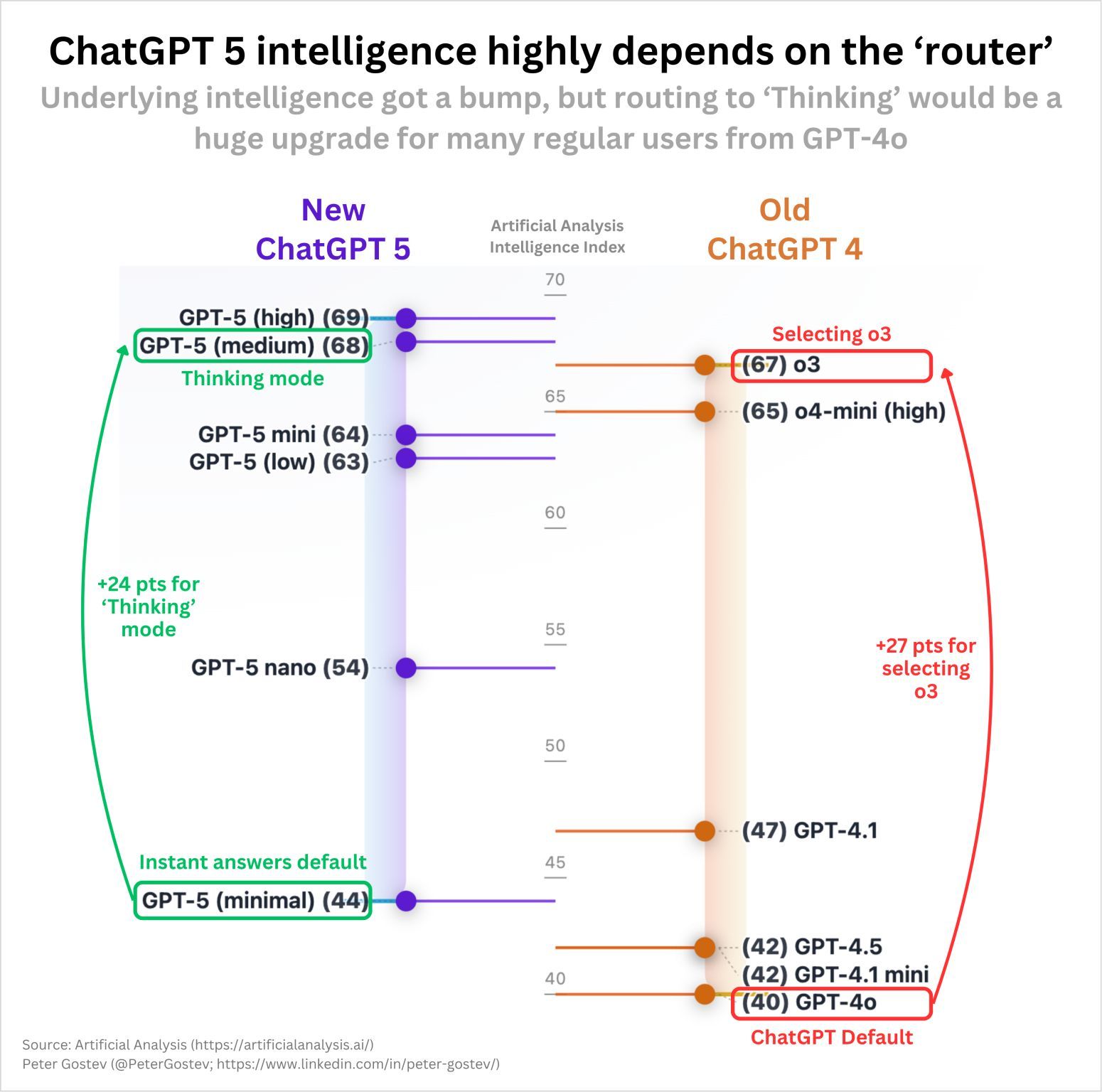

But there's a catch that Artificial Analysis revealed: GPT-5's intelligence depends heavily on which model your request gets routed to. Their benchmark shows:

GPT-5 (high): Intelligence score of 69

GPT-5 (medium): Score of 68

GPT-5 (minimal): Score of 44—lower than GPT-4o!

You're essentially playing a router lottery. Pay for "Thinking mode" (+24 points) and you might get brilliance. Get routed to "minimal" for instant answers, and you're worse off than the previous generation. This variability makes GPT-5 unreliable for consistent, production-critical coding work.
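The size of the lottery can be read straight off the three scores listed above. This is a small sketch using only those quoted numbers; the variable names are mine. The +24 figure appears to correspond to medium minus minimal, while high minus minimal is one point more.

```python
# Artificial Analysis intelligence scores, as quoted in the text.
scores = {"high": 69, "medium": 68, "minimal": 44}

# Gap between the best and worst routing outcome.
spread = max(scores.values()) - min(scores.values())

# Gap between the medium and minimal settings.
medium_vs_minimal = scores["medium"] - scores["minimal"]

print(f"best vs worst: {spread} points")
print(f"medium vs minimal: {medium_vs_minimal} points")
```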

Compare this to Claude: you know exactly what you're getting every time. No router roulette, no surprise downgrades.

The Prompt Engineering Moat Nobody Talks About

Here's where it gets fascinating. A deep dive into Claude Code's internals (shoutout to Yifan for really great work on this) reveals the true secret: sophisticated prompt engineering specifically tuned for Claude's neural architecture.

The key insights from this analysis:

Prompt Engineering is the "Secret Sauce": Claude Code's effectiveness doesn't come from a hidden model; it comes from highly detailed prompt engineering crafted for Anthropic's models

Agentic Workflow Design: The system uses natural language workflows defined in the prompt, not hard-coded logic

Model-Specific Tuning: Instructions that work brilliantly for Claude fail catastrophically with other models

Repetition and Reinforcement: Critical instructions are repeated in multiple ways throughout the prompt to ensure Claude adheres to them

Think of it like Formula 1 racing. GPT-5 is a luxury SUV: impressive specs, loaded with features, handles everything reasonably well, and much cheaper to run. Claude Code is an F1 car, engineered for one purpose with every component optimized for that specific track. Yes, it costs more, but when you need to win the race, price becomes secondary.

The prompts use sophisticated techniques:

XML tags for hierarchical information structure

System reminders injected contextually during conversations

Explicit tool usage examples with good/bad patterns

Task management as a core function with constant reinforcement
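To make the techniques in this list concrete, here is an illustrative sketch of a prompt assembled with XML-style tags, good/bad tool examples, and an injected reminder. It is not Claude Code's actual prompt: every tag name and function name below is made up for illustration.

```python
from xml.sax.saxutils import escape


def tag(name: str, body: str) -> str:
    """Wrap text in an XML-style tag (tag names are hypothetical)."""
    return f"<{name}>\n{body}\n</{name}>"


def build_prompt(task: str, good_example: str, bad_example: str) -> str:
    """Nest a task and good/bad tool usage examples in a tag hierarchy."""
    examples = "\n".join([
        tag("good_example", escape(good_example)),
        tag("bad_example", escape(bad_example)),
    ])
    return "\n".join([
        tag("task", escape(task)),
        tag("tool_usage_examples", examples),
    ])


def with_reminder(message: str, reminder: str) -> str:
    """Append a contextual reminder to a conversation message."""
    return message + "\n" + tag("system_reminder", escape(reminder))


prompt = build_prompt(
    task="Fix the failing test in utils & helpers",
    good_example="Read the file before editing it.",
    bad_example="Edit the file without reading it.",
)
print(with_reminder(prompt, "Keep the task list up to date."))
```

The point of the structure is that each kind of instruction lives in its own labeled section, so it can be repeated or injected mid-conversation without rewriting the rest of the prompt.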

When GPT-5 tries to follow these Claude-optimized instructions, it's like asking a concert pianist to play a piece written specifically for a guitarist's fingering patterns. Technically possible, but something essential gets lost.

The Reality Check Developers Need

What does this mean for you?

If you're optimizing for cost, GPT-5's pricing is undeniably attractive. For general tasks, documentation, and non-critical code, it might be the smart choice.

But for serious development work where consistency and quality matter, Claude Code's optimization is worth the premium. That prompt engineering moat isn't just marketing - it's the difference between code you ship and code you rewrite.

Don't take my word for it. Here's your test:

Try the Claude Code router with different models

Test GPT-5 through OpenRouter

Compare consistency, not just peak performance

Check which produces reliably production-ready code
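One way to compare consistency rather than peak performance is to score several runs of the same task per model and look at the spread as well as the best result. The sketch below assumes you assign each run a score yourself (say, 0 to 10). The model names and numbers are placeholders, not measurements from my testing.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def summarize(run_scores: Sequence[float]) -> Dict[str, float]:
    """Summarize repeated runs: average, best case, and variability."""
    return {
        "mean": mean(run_scores),
        "best": max(run_scores),
        "spread": pstdev(run_scores),  # population standard deviation
    }


# Placeholder scores for illustration only; replace with your own ratings.
runs = {
    "model_a": [7, 7, 8, 7],
    "model_b": [9, 4, 9, 5],
}

for name, scores_for_model in runs.items():
    stats = summarize(scores_for_model)
    print(f"{name}: mean={stats['mean']:.2f} "
          f"best={stats['best']} spread={stats['spread']:.2f}")
```

A model with a higher best score but a larger spread is the one to be wary of for production work.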

As models commoditize and prices race to the bottom, the winners won't have the cheapest or even smartest models - they'll have the most reliable, specialized optimization for specific use cases. It seems like we're entering the product era.

Claude Code's prompts are their moat. GPT-5 can't cross it - at any price.

That's why I'm sticking with Claude Max.

What about you? Let me know your thoughts on the GPT-5 release by hitting reply!

Luke